Robotaxis that plug into your brain

PLUS: Unitree opens first physical store

Good morning, robotics enthusiasts. Your next Tesla or Waymo robotaxi might know exactly how you’re feeling — and adjust its driving to keep you calm.

Chinese researchers are testing cars that read passengers’ brain signals to slow down, stiffen, or smooth out the ride in real time. If it leaves the lab, expect AVs that finally listen to the humans inside, not just the sensors outside.

In today’s robotics rundown:

Self-driving cars now tap brain signals

Unitree’s first brick-and-mortar robot store

New synthetic skin lets robots feel pain

Robots just got night vision superpowers

Quick hits on other robotics news

LATEST DEVELOPMENTS

SELF-DRIVING VEHICLES

🧠 Self-driving cars now tap brain signals

Image source: Ideogram / The Rundown

The Rundown: Chinese researchers are testing self-driving software that reads passengers’ brain signals and automatically slows or stiffens the car’s behavior when riders feel stressed, improving safety and comfort in simulation over conventional systems.

The details:

Chinese researchers are experimenting with self-driving systems that tap passengers’ brain signals, using fNIRS headbands to monitor stress.

The system feeds these brain metrics into a deep reinforcement learning algorithm that dynamically adjusts the driving style.

Simulated tests showed faster learning curves, fewer close calls, and smoother rides versus standard AV controllers.

The study suggests that human physiological data could act as an extra safety channel for AVs, supplementing radar, lidar, and camera inputs.
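One way to picture how a stress reading could steer a reinforcement-learning driver is as an extra penalty term in the reward. The sketch below is only an illustration under assumed names and weights (`StepInfo`, `shaped_reward`, and a stress score normalized to 0..1 are all hypothetical); it is not the researchers' algorithm.

```python
from dataclasses import dataclass


@dataclass
class StepInfo:
    progress_m: float  # distance covered this step (meters)
    jerk: float        # change in acceleration this step (m/s^3)
    near_miss: bool    # True if the car came too close to an obstacle
    stress: float      # hypothetical normalized fNIRS stress score in [0, 1]


def shaped_reward(info: StepInfo,
                  w_progress: float = 1.0,
                  w_jerk: float = 0.1,
                  w_near_miss: float = 5.0,
                  w_stress: float = 2.0) -> float:
    """Reward = progress minus penalties; stress is one extra penalty term."""
    stress = min(max(info.stress, 0.0), 1.0)  # clamp noisy sensor values
    reward = w_progress * info.progress_m
    reward -= w_jerk * abs(info.jerk)
    reward -= w_near_miss * (1.0 if info.near_miss else 0.0)
    reward -= w_stress * stress
    return reward


# Same maneuver, different passenger state: the calm ride earns more reward.
calm = StepInfo(progress_m=2.0, jerk=0.5, near_miss=False, stress=0.1)
tense = StepInfo(progress_m=2.0, jerk=0.5, near_miss=False, stress=0.9)
print(shaped_reward(calm) > shaped_reward(tense))
```

With a term like this, a policy that keeps making passengers tense earns less reward for the same progress, so training would tend to nudge it toward gentler driving.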

Why it matters: Today's autonomous cars treat passengers like cargo — you get the ride the algorithm chose, anxiety be damned. Brain-in-the-loop driving flips that script, letting the vehicle sense fear and back off before you white-knuckle the armrest. If it works beyond the lab, expect AVs that build trust by actually listening to passengers.

UNITREE

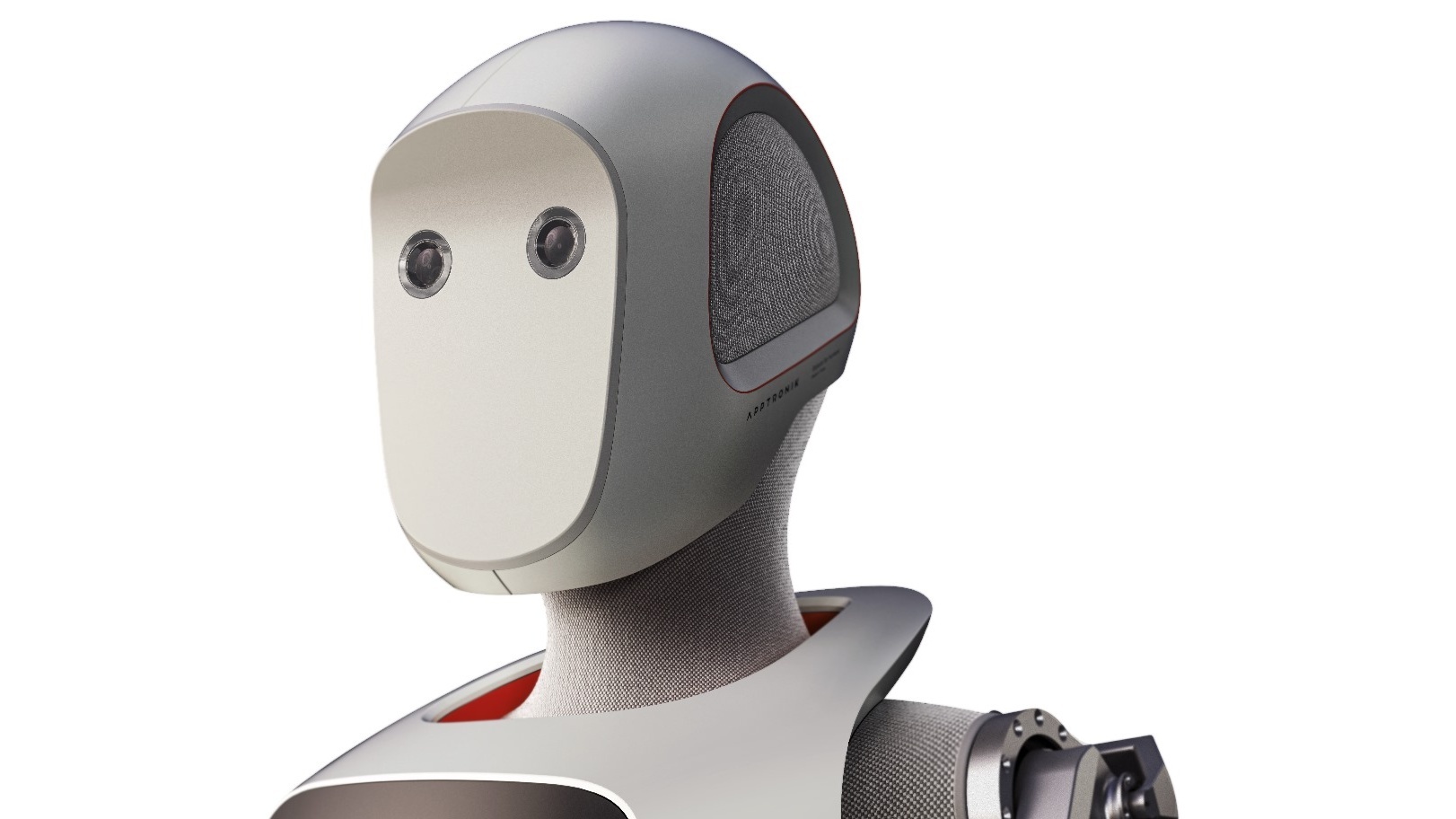

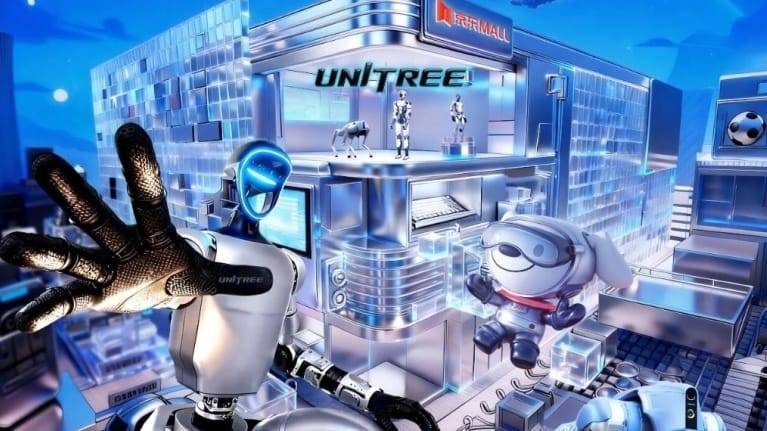

🛍️ Unitree’s first brick-and-mortar robot store

Image source: Unitree and JD.com

The Rundown: Chinese robotics giant Unitree opened its first brick-and-mortar robot store today with JD.com at JD Mall in Beijing’s Shuangjing district, letting shoppers poke, prod, and purchase its humanoid and quadruped machines in person.

The details:

The store showcases Unitree’s G1 humanoid, Go2 quadruped, and other robots, which visitors can try in person before buying.

Customers can purchase on-site or scan a QR code to order through JD’s mini-program, with options for in-store pickup or JD home delivery.

Unitree logged about $129M in revenue in 2024, with its quadruped robots making up 69.75% of global quadruped sales and 1,500 humanoids delivered.

Unitree sells the R1 for $5,117, the G1 for $12,683, and the H1 for $83,526, and has also launched a humanoid app store for tap-to-deploy motions.

Why it matters: Unitree’s first physical storefront marks a hard pivot into mainstream consumer retail for its popular robots. It shows how a fast-growing Chinese robotics player with about $129M in 2024 revenue, a dominant quadruped share, and IPO ambitions is building an ecosystem around app-downloadable robot skills instead of just pushing hardware.

CHINESE ROBOTICS

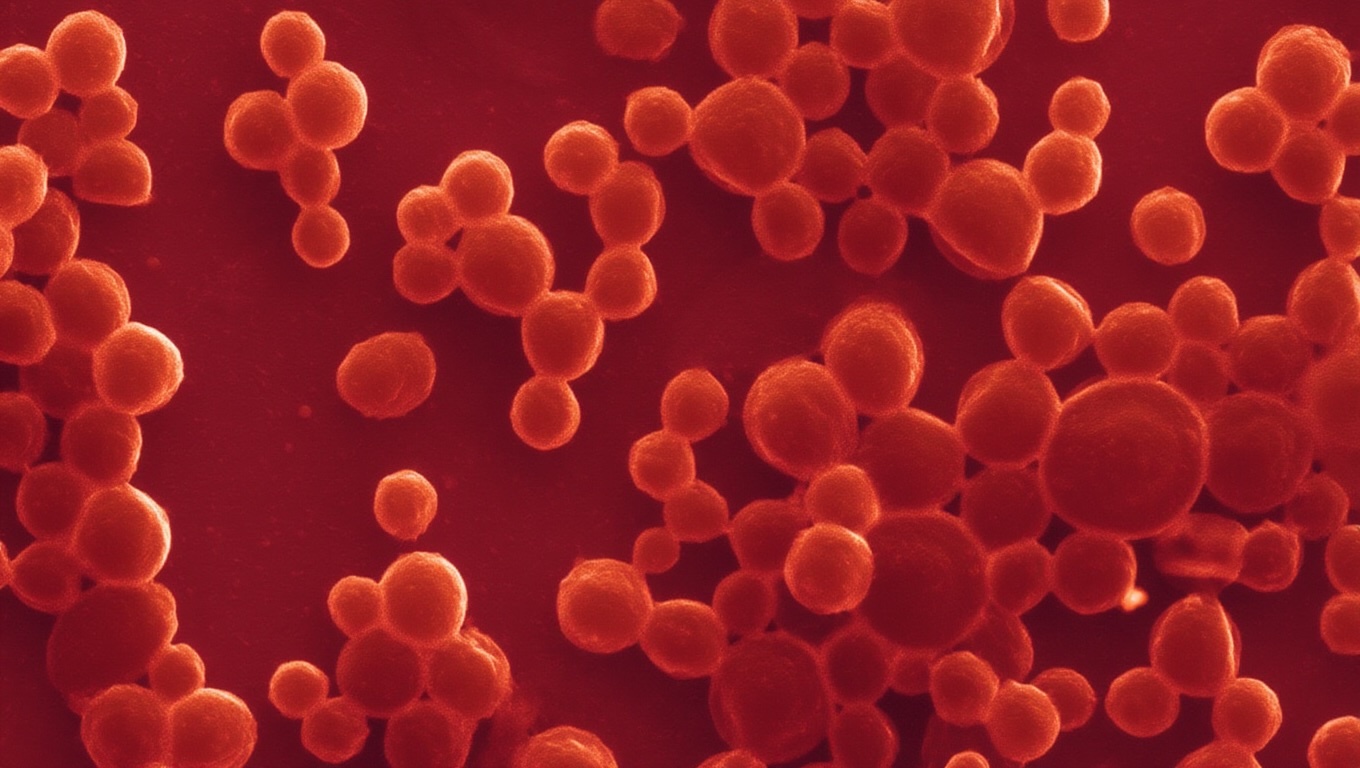

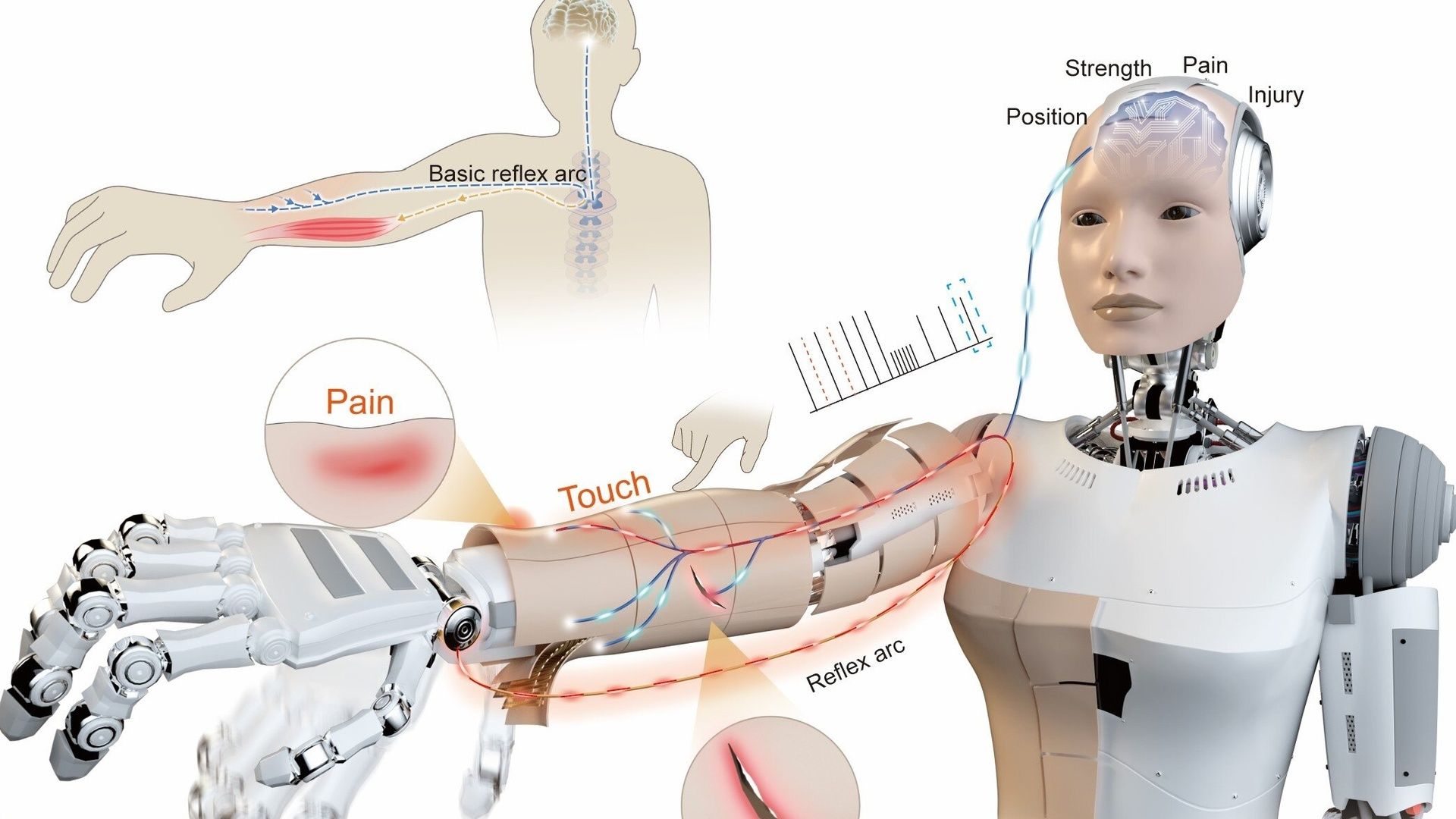

🔥 New synthetic skin lets robots feel pain

Image source: Xinge Yu, City University of Hong Kong

The Rundown: Chinese researchers have designed a neuromorphic “e-skin” that lets humanoids jerk away from danger before their central processor even notices — like yanking your hand off a hot stove.

The details:

The four-layer synthetic skin converts touch into spike-train pulses that mimic biological nerves.

Continuous low-level “heartbeat” signals let robots self-diagnose cuts and localize damage.

When pressure crosses a preset “pain” threshold, the system routes a high-voltage signal straight to the motors, triggering immediate reflex actions.

The e-skin is built from modular, magnetically attached patches that can be swapped out in seconds.
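The heartbeat check and pain-threshold reflex described above can be sketched as a tiny per-patch decision rule. This is a simplified illustration, not the team's implementation: the threshold, the timeout, and the names `PatchReading` and `classify` are all hypothetical.

```python
from dataclasses import dataclass

PAIN_THRESHOLD = 50.0      # hypothetical pressure units
HEARTBEAT_TIMEOUT_S = 0.5  # hypothetical: longer silence suggests damage


@dataclass
class PatchReading:
    patch_id: str
    pressure: float          # latest pressure on this patch
    last_heartbeat_s: float  # timestamp of the patch's last heartbeat pulse


def classify(reading: PatchReading, now_s: float) -> str:
    """Return 'damaged', 'reflex', or 'normal' for one skin patch."""
    if now_s - reading.last_heartbeat_s > HEARTBEAT_TIMEOUT_S:
        return "damaged"  # heartbeat went quiet: flag this patch for a swap
    if reading.pressure > PAIN_THRESHOLD:
        return "reflex"   # skip the main processor, command the motors directly
    return "normal"       # hand off to the main controller as usual


readings = [
    PatchReading("forearm-1", pressure=12.0, last_heartbeat_s=9.9),
    PatchReading("palm-2", pressure=80.0, last_heartbeat_s=9.9),
    PatchReading("elbow-3", pressure=0.0, last_heartbeat_s=8.0),
]
for r in readings:
    print(r.patch_id, classify(r, now_s=10.0))
```

Checking the heartbeat first means a cut patch is reported as damaged rather than mistaken for a patch that simply feels nothing.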

Why it matters: Reflexes that bypass the robot’s main brain let it pull back from harm without waiting on central processing, which could protect both the machine and the people working near it. The team now wants multi-touch capability so robots can track and respond to several contact points at once.

ROBOTICS RESEARCH

👁️ Robots just got night vision superpowers

Image source: The University of Manchester

The Rundown: Researchers at the University of Manchester have taught robots to see in total darkness by training a machine-learning model to reconstruct crisp, visible-light-style images from grainy infrared camera feeds.

The details:

The University of Manchester team has built CLEAR-IR, a machine-learning system that turns infrared camera feeds into clear, visible-light-like images.

The method slots in front of existing perception stacks, so robots can keep using their current vision and navigation algorithms without retraining.

The researchers say CLEAR-IR yields images sharp enough to give robots daylight-grade situational awareness when no visible light is present.

The team adds that the same technique could potentially work underwater or in extreme-heat environments where normal cameras fail.
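The "slots in front of existing perception stacks" idea amounts to wrapping an unchanged perception function with an image-enhancement step. The sketch below shows only that wiring: `stretch_contrast` is a trivial stand-in and has nothing to do with how CLEAR-IR works internally, and every name here is hypothetical.

```python
from typing import Callable, List

Frame = List[List[float]]  # grayscale image as rows of pixel intensities


def stretch_contrast(frame: Frame) -> Frame:
    """Stand-in enhancer: rescale intensities to span 0.0..1.0."""
    flat = [p for row in frame for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in frame]
    return [[(p - lo) / (hi - lo) for p in row] for row in frame]


def with_enhancer(enhance: Callable[[Frame], Frame],
                  perceive: Callable[[Frame], int]) -> Callable[[Frame], int]:
    """Wrap an unchanged perception function so it sees enhanced frames."""
    return lambda frame: perceive(enhance(frame))


def count_bright_pixels(frame: Frame) -> int:
    """Toy 'existing' perception step tuned for well-lit images."""
    return sum(1 for row in frame for p in row if p > 0.5)


dim_ir_frame = [[0.10, 0.12], [0.11, 0.20]]
night_ready = with_enhancer(stretch_contrast, count_bright_pixels)
print(count_bright_pixels(dim_ir_frame), night_ready(dim_ir_frame))
```

On the dim frame, the toy detector finds nothing by itself but picks out one bright pixel once the enhancer runs first, and `count_bright_pixels` itself is never modified or retrained.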

Why it matters: CLEAR-IR turns any infrared-equipped bot into a night-vision machine without new hardware or a retrained vision stack, unlocking search-and-rescue missions and underground infrastructure mapping. If the method scales, expect robots tackling disaster zones and industrial sites humans can’t safely enter.

QUICK HITS

📰 Everything else in robotics today

Chinese robotics firm UBTECH says it has hit a key milestone, rolling its 1,000th Walker S2 humanoid off the line at its Liuzhou manufacturing plant.

Amazon said it has dropped plans to launch drone deliveries in Italy, citing hurdles in the country’s broader business regulatory framework despite progress with aviation authorities.

China’s cyber regulator issued draft rules for AI systems that emotionally interact with users, requiring providers to police addiction risks and protect data.

VinMotion, widely seen as Vietnam’s leading humanoid robotics startup, rolled out a second-generation version of its flagship Motion robot.

Schaeffler unveiled a production-ready planetary gear actuator — a compact in-house drive unit — designed specifically for humanoids.

An Nvidia-powered Unitree G1 humanoid told CNBC that “only time will tell” if the AI boom is a bubble, but predicted robots like it will soon move into more roles.

AI² Robotics unveiled ZhiCube, a modular “embodied AI” kiosk built around its AlphaBot2 that can swap coffee, ice cream, or retail modules for malls and parks.

COMMUNITY

🎓 Highlights: News, Guides & Events

Read our last AI newsletter: Meta’s next big AI bet: Manus

Read our last Robotics newsletter: World’s smallest autonomous robots

Read our last Tech newsletter: Meta buys AI startup Manus for $2B

Today’s AI tool guide: Design better websites with Cursor’s new editor

Watch our last live workshop: NotebookLM for Work

See you soon,

Rowan, Jennifer, and Joey—The Rundown’s editorial team
