Google's upgrade breaks reasoning barriers

PLUS: How to generate a TV commercial with AI


Good morning, AI enthusiasts. OpenAI and Anthropic have been grabbing all the 2026 headlines — but Google just reminded everyone why it's still the biggest powerhouse in the AI race.

With an upgraded Deep Think obliterating benchmarks across math, coding, and science, and a new research agent autonomously solving open problems, the tech giant is pushing frontier AI for scientific research into uncharted territory.

In today’s AI rundown:

Google's Deep Think crushes reasoning benchmarks

OAI launches ultra-fast coding model on Cerebras chips

How to generate a TV commercial with AI

MiniMax's open-source M2.5 hits frontier coding levels

4 new AI tools, community workflows, and more

LATEST DEVELOPMENTS

⚡Google's Deep Think crushes reasoning benchmarks

Image source: Google

The Rundown: Google just released a major update to its Gemini 3 Deep Think reasoning mode, posting dominant scores across math, coding, and science — while also introducing its Olympiad-level math research agent driven by the new upgrade.

The details:

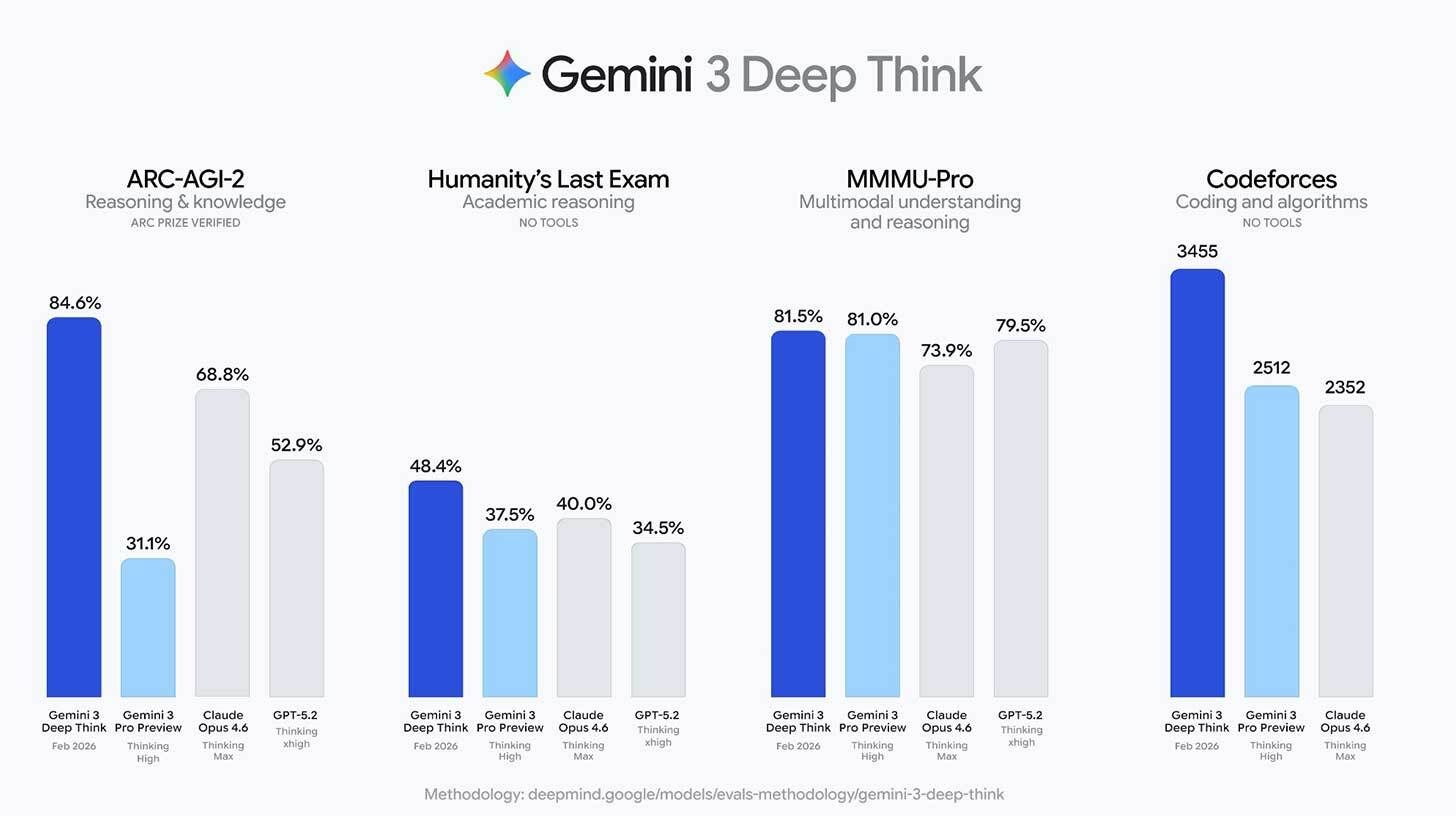

Deep Think hit 84.6% on ARC-AGI-2, obliterating Opus 4.6 (68.8%) and GPT-5.2 (52.9%), and set a new high of 48.4% on Humanity's Last Exam.

It also reached gold-medal marks on the 2025 Physics & Chemistry Olympiads and scored a 3,455 Elo on Codeforces, nearly 1,000 points above Opus 4.6.

Google also unveiled Aletheia, a math agent that autonomously solves open problems, verifies proofs, and hits new highs across domain benchmarks.

The Deep Think upgrade is live for Google AI Ultra subscribers in the Gemini app, with API access open to researchers via an early access program.

Why it matters: After Google dominated benchmarks and headlines to close 2025, the focus in 2026 has shifted toward Anthropic and OpenAI, but don’t count out the tech giant, which is arguably still the biggest powerhouse in the AI race. Deep Think’s scores are wild, and the frontier for math and science is quickly moving into uncharted territory.

TOGETHER WITH VOXEL51

💸 Stop wasting 95% of your data labeling budget

The Rundown: Most teams are labeling massive amounts of data that never gets used for model training. Voxel51's technical workshop on Feb. 18 shows how to build feedback-driven annotation pipelines that eliminate over-labeling — saving time and money while improving model performance.

Join the workshop and learn:

How to use zero-shot selection and embeddings for maximum cost savings

QA workflows to review specific objects and fix errors fast

How to implement dedicated test sets to catch label drift early

Debugging with embeddings to visualize the clusters confusing your model

OPENAI

⚡ OAI launches ultra-fast coding model on Cerebras chips

Image source: OpenAI

The Rundown: OpenAI released GPT-5.3-Codex-Spark, a new speed-optimized coding model that runs on Cerebras hardware, cranking out 1,000+ tokens per second and marking the company's first AI product powered by chips beyond its Nvidia stack.

The details:

Spark trades intelligence for speed, trailing the full 5.3-Codex on SWE-Bench Pro and Terminal-Bench but finishing tasks in a fraction of the time.

The release comes just weeks after OAI inked a $10B+ deal with Cerebras and separate agreements with AMD and Broadcom, diversifying away from Nvidia.

OAI's vision is for Spark to handle quick interactive edits while the full Codex tackles longer autonomous tasks in the background.

The model is rolling out as a research preview for ChatGPT Pro subs, with API access initially limited to a handful of enterprise design partners.

Why it matters: The main criticism of Codex has been its speed, and OpenAI just addressed it in a big way while making its chip diversification play real with the first product built on Cerebras hardware. Real-time coding with instant feedback will likely change workflows for development tasks that can trade a bit of power for speed.

AI TRAINING

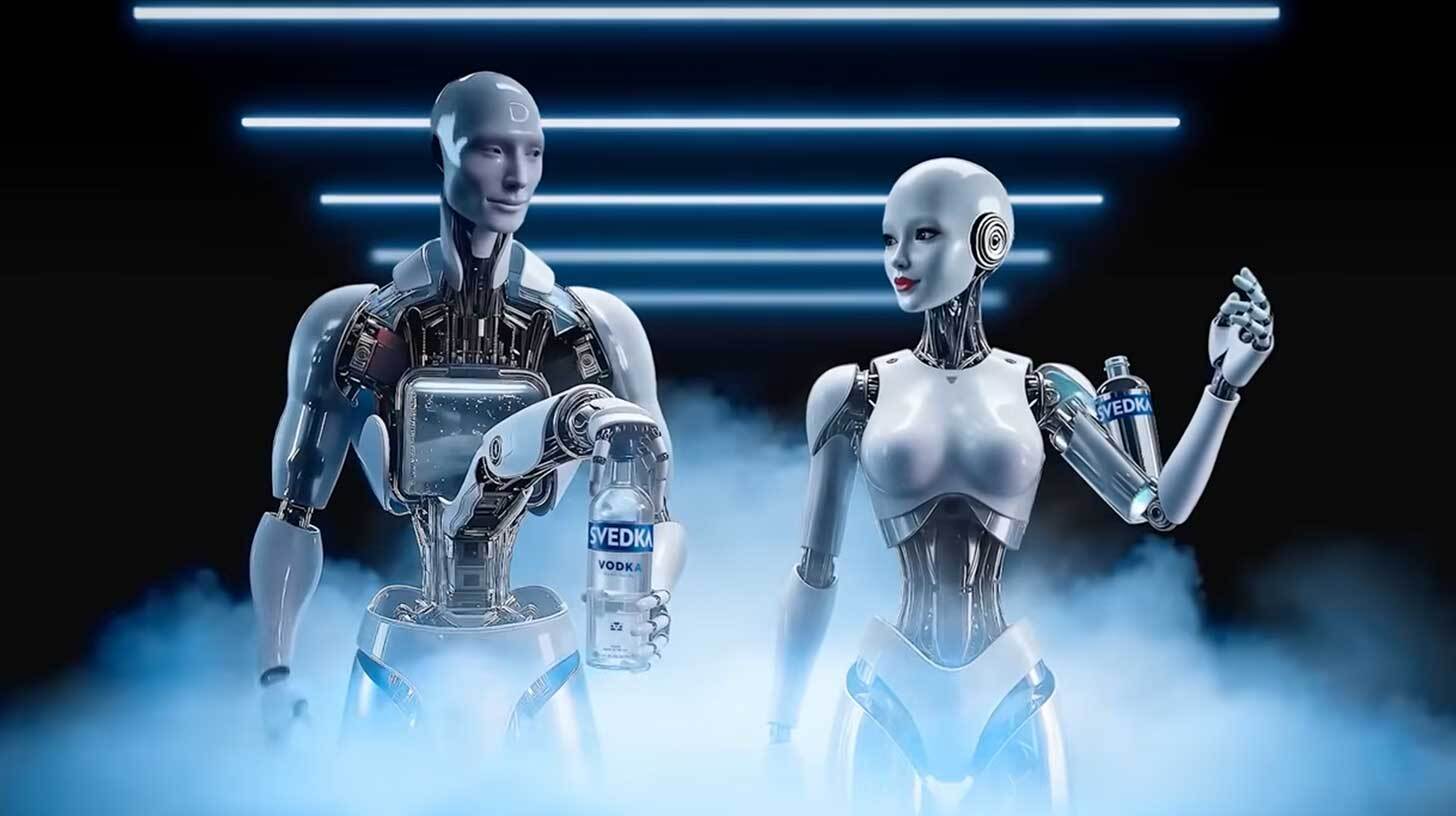

📺 How to generate a TV commercial with AI

The Rundown: In this guide, you will learn to generate a 20-second ad in the style of a professional TV commercial, with a planned workflow that takes the guesswork out of outputs so you don't have to click and pray.

Step-by-step:

Think of a commercial idea and ask Gemini to plan out two 5s scenes. Once done, ask it to write prompts for the start and end frames of both scenes.

Now, log in to Higgsfield (you will need a basic/pro plan) and click Image > Create Image > Nano Banana Pro. Set 4K quality, 4 variations, and a 21:9 ratio.

Generate the start + end frame for scene 1 and just the end frame for scene 2. Download the ones you like best.

In Higgsfield, go to Video > Kling 3.0, upload your frames with the short scene prompt, and hit generate. After this, stitch the videos in a free editor.

Pro tip: Ask Gemini to use photography terms like “hero shot” when generating scene prompts. You can also generate music for the ad with Suno + ElevenLabs.
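If you would rather script the final stitching step than open an editor, here is a minimal sketch that assumes you have the free command-line tool ffmpeg installed. The file names (`scene1.mp4`, `scene2.mp4`, `clips.txt`, `commercial.mp4`) and both helper functions are hypothetical, and the stream-copy approach only works when both clips share the same codec and resolution.

```python
import shlex
from pathlib import Path


def build_concat_list(clips):
    """Return the text of an ffmpeg concat-demuxer list file, one clip per line."""
    return "".join(f"file '{Path(clip).as_posix()}'\n" for clip in clips)


def build_ffmpeg_command(list_path, output_path):
    """Return the ffmpeg command that joins the listed clips without re-encoding."""
    return [
        "ffmpeg",
        "-f", "concat",   # use ffmpeg's concat demuxer
        "-safe", "0",     # allow arbitrary paths in the list file
        "-i", str(list_path),
        "-c", "copy",     # copy streams as-is (clips must share codec and resolution)
        str(output_path),
    ]


# Hypothetical file names for the two downloaded scene clips.
clips = ["scene1.mp4", "scene2.mp4"]
list_text = build_concat_list(clips)
command = build_ffmpeg_command("clips.txt", "commercial.mp4")

print(list_text, end="")
print(shlex.join(command))
```

To actually produce the video, save `list_text` to `clips.txt` and run the printed command in a terminal, or pass `command` to `subprocess.run(command, check=True)`.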

PRESENTED BY CDATA

🏗️ Build secure agentic AI that scales

The Rundown: Microsoft and CData are teaming up for a live 45-minute session on how to design secure, scalable agentic infrastructure using Copilot Studio, Agent 365, and CData's Connect AI — including a live cross-system workflow demo.

In this session, you'll learn:

How Microsoft and CData deliver connectivity, context, and control for production AI agents

Agent design and production best practices from both teams

How a Copilot Studio agent syncing with Salesforce and Dynamics 365 is built and deployed

Register here for the session. All registrants will receive the session recording.

MINIMAX

💰 MiniMax's open-source M2.5 hits frontier coding levels

Image source: MiniMax

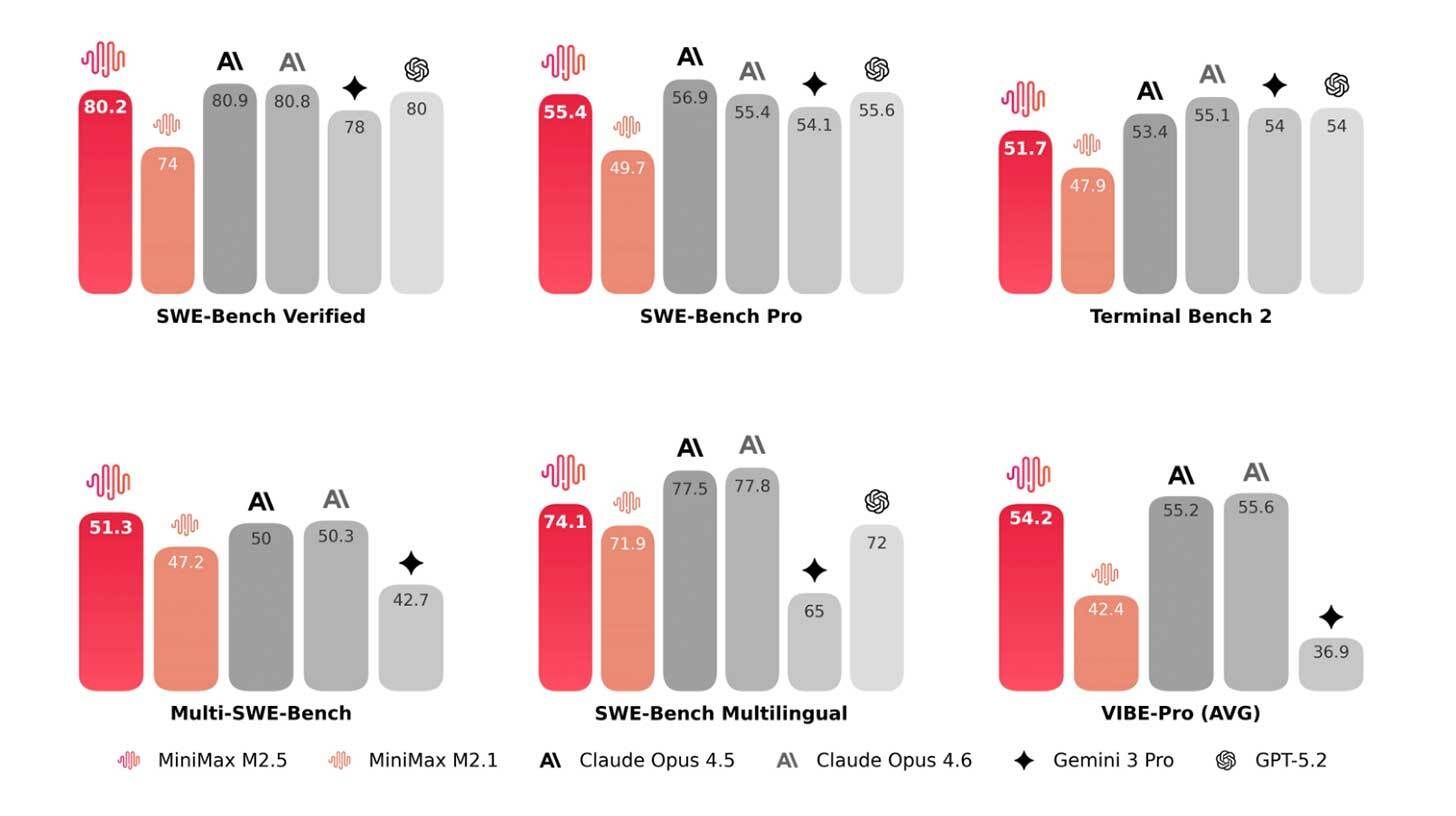

The Rundown: Chinese AI lab MiniMax launched M2.5, an open-source model that rivals Opus 4.6 and GPT-5.2 on agentic coding benchmarks at a fraction of the cost, making it cheap enough to power AI agents running around the clock.

The details:

M2.5 shows especially strong coding performance, scoring roughly even with Opus 4.6 and GPT-5.2 across key development benchmarks.

Two APIs are available: a faster M2.5-Lightning ($2.40/M output) and a standard M2.5 ($1.20/M output), both priced much lower than Opus ($25/M).

MiniMax revealed that M2.5 now handles 30% of daily company tasks across R&D, product, sales, HR, and finance, as well as 80% of new code commits.

The models are available via API, though the open-source weights and license have yet to be published.
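To put the output prices listed above in perspective, here is a rough sketch of the cost math. The per-million prices come from the details above; the 50M-tokens-per-day workload and the `output_cost` helper are hypothetical, chosen only for illustration, and input-token pricing is ignored.

```python
# Output prices in USD per million tokens, as quoted in the details above.
PRICE_PER_M_OUTPUT = {
    "M2.5": 1.20,
    "M2.5-Lightning": 2.40,
    "Opus": 25.00,
}


def output_cost(model: str, output_tokens: int) -> float:
    """Return the output-token cost in USD for the given model."""
    return PRICE_PER_M_OUTPUT[model] * output_tokens / 1_000_000


# Hypothetical workload: an always-on agent emitting 50M output tokens per day.
DAILY_OUTPUT_TOKENS = 50_000_000

for model in PRICE_PER_M_OUTPUT:
    print(f"{model}: ${output_cost(model, DAILY_OUTPUT_TOKENS):,.2f} per day")

# How many times cheaper each MiniMax tier is than Opus on output tokens.
for model in ("M2.5", "M2.5-Lightning"):
    ratio = PRICE_PER_M_OUTPUT["Opus"] / PRICE_PER_M_OUTPUT[model]
    print(f"Opus costs about {ratio:.1f}x more than {model}")
```

Under these assumptions, the standard tier comes out roughly 20x cheaper than Opus on output tokens, and the Lightning tier roughly 10x cheaper.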

Why it matters: Every few months, it feels like a Chinese lab drops a model that changes the cost math for the entire industry. M2.5’s frontier-level coding at this price makes "intelligence too cheap to meter" feel closer than ever, an important development as agents handling longer autonomous tasks become more common.

QUICK HITS

🛠️ Trending AI Tools

🔒 Incogni - remove your personal data from the web so scammers and identity thieves can’t access it. Use code RUNDOWN to get 55% off.*

🧠 Gemini 3 Deep Think - Google's upgraded AI reasoning mode

⚡️ GPT-5.3-Codex-Spark - OpenAI’s ultra-fast model for real-time coding

🤖 M2.5 - MiniMax’s new open-source frontier model with powerful coding

*Sponsored Listing

📰 Everything else in AI today

ByteDance officially launched Seedance 2.0, the company’s viral SOTA video model, publishing benchmark results and a technical blog, but access still remains restricted.

Mustafa Suleyman told FT that most white-collar work will be "fully automated by AI within 12 to 18 months," with Microsoft pursuing "true self-sufficiency" with its models.

Elon Musk said that xAI's wave of departures was forced, not voluntary — calling it a reorg for "speed of execution" after losing ten co-founders and engineers this week.

OpenAI is retiring GPT-4o, GPT-4.1, and o4-mini from ChatGPT today, amid pushback from users calling for 4o’s preservation.

Anthropic officially announced a new $30B funding round at a $380B valuation, with its revenue run rate hitting $14B — $2.5B of which comes from Claude Code alone.

OAI researcher Zoë Hitzig resigned after the launch of ChatGPT ads, warning OAI’s archive of human thought creates “unprecedented potential for manipulation.”

COMMUNITY

🤝 Community AI workflows

Every newsletter, we showcase how a reader is using AI to work smarter, save time, or make life easier.

Today’s workflow comes from reader Anthony H. in Australia:

"I needed a QR code scanner to check in our members at regular meetings to be used on an iPad. I couldn't find a good solution that wasn't expensive or bloated with extra features not needed. So, I created my own with Google AI Studio, GitHub, and Vercel.

It features event session creation, member profiles, auto-create custom QR codes for each member, and a system backup, as the data is held locally due to privacy. I added bulk import and export functions. Reports can be created that we need for our funding requirements as well."

How do you use AI? Tell us here.

🎓 Highlights: News, Guides & Events

Read our last AI newsletter: xAI’s next phase unleashed

Read our last Tech newsletter: Musk’s ‘self-growing’ Moon city

Read our last Robotics newsletter: Apptronik’s $935M humanoid moment

Today’s AI tool guide: How to generate a TV commercial with AI

RSVP to our next workshop on Feb. 18: Agentic Workflows Bootcamp pt.2

See you soon,

Rowan, Joey, Zach, Shubham, and Jennifer — the humans behind The Rundown
