Nvidia strikes largest deal in company history

PLUS: Perform real-time market research using Grok


Good morning, AI enthusiasts. Hope you had a happy holiday — and as expected, there was no shortage of AI news over our week-long break.

It was a particularly sweet one for Jensen Huang, with Nvidia dropping $20B to license Groq’s speedy AI chips, while also bringing the creator of Google’s rival TPUs on board in the process.

In today’s AI rundown:

Nvidia licenses Groq tech in $20B deal

The Rundown Roundtable: Our AI use cases

Perform real-time market research using Grok

Z.ai’s GLM-4.7 tops open-source benchmarks

4 new AI tools, community workflows, and more

LATEST DEVELOPMENTS

NVIDIA & GROQ

💰 Nvidia licenses Groq tech in $20B deal

Image source: Reve / The Rundown

The Rundown: Nvidia just struck a licensing deal reportedly worth $20B with AI chip startup Groq, with the company's CEO and president also joining the chip giant to help integrate and scale the tech.

The details:

The deal targets Groq's LPU chips, which specialize in running AI models quickly and cheaply, with Groq claiming 10x the speed at a fraction of a GPU's energy use.

Groq was valued at $6.9B just 3 months ago after raising $750M from investors like BlackRock, Samsung, and Cisco.

Groq CEO Jonathan Ross and President Sunny Madra will join Nvidia as the startup continues independently under CFO Simon Edwards.

Ross helped create Google's TPU chips before founding Groq in 2016. The $20B deal is the largest in Nvidia’s history.

Why it matters: Groq’s Ross left Google after helping create the TPU chips that now compete directly with Nvidia's GPUs, and a decade later, Nvidia is bringing him on board. As custom silicon from Google and Amazon chips away at its lead, Nvidia now seems to be playing defense by stockpiling the talent behind it.

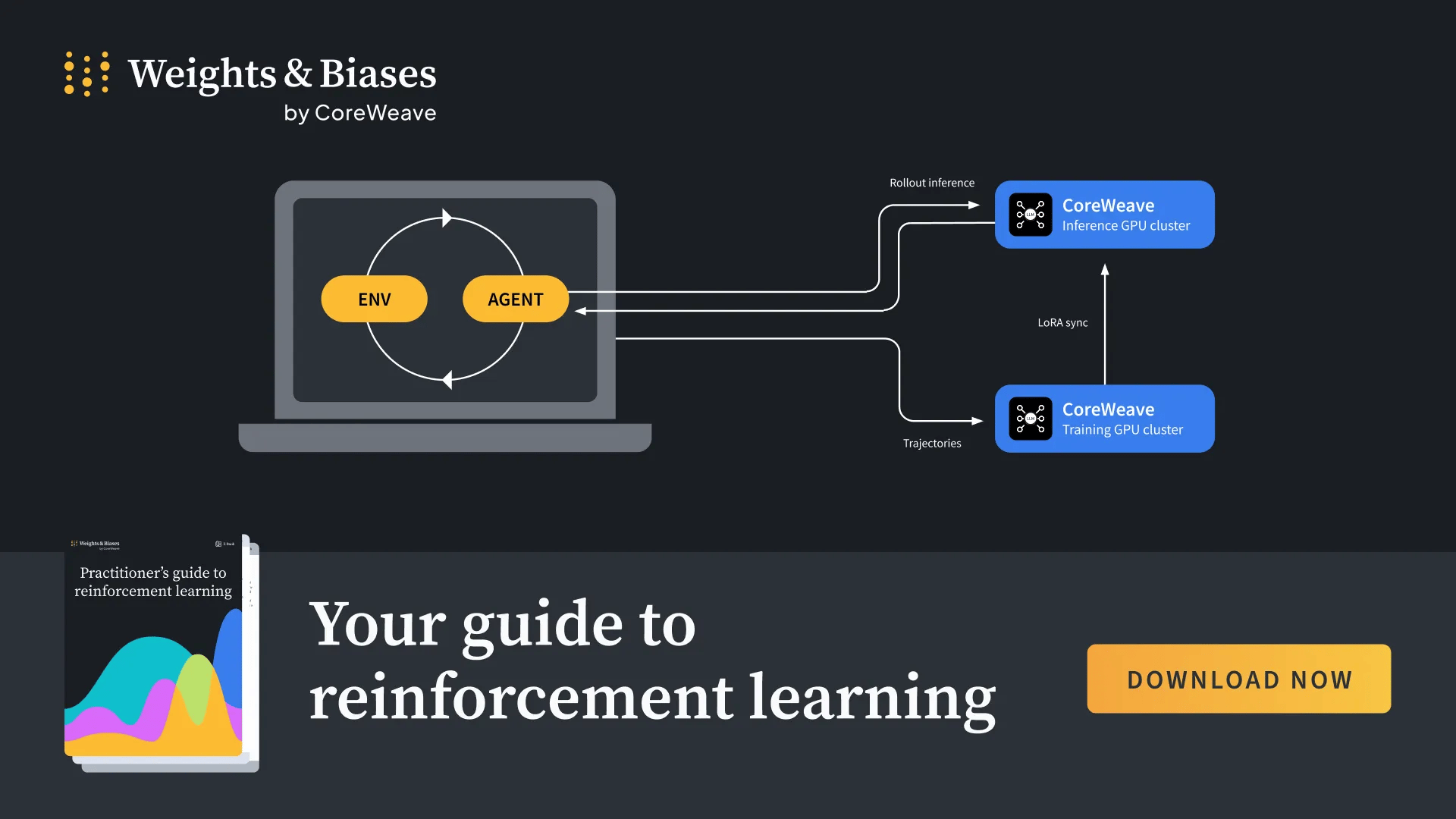

TOGETHER WITH WEIGHTS & BIASES

📙 Practitioner’s guide to post-training LLMs with RL

The Rundown: Need to get caught up quickly on how to post-train LLMs on agentic tasks using reinforcement learning (RL)? This practitioner's guide covers RL's origins, benefits teams can realize by incorporating RL for post-training, and how to get started.

Get the guide to learn:

The role of RL in post-training agents and how it compares to SFT

How LoRA makes fine-tuning more efficient

Key benefits and use cases of RL in production workloads

Using Serverless RL from Weights & Biases to kickstart your RL jobs in minutes

Download the Practitioner's guide to reinforcement learning.

THE RUNDOWN ROUNDTABLE

💡 The Rundown Roundtable: Our AI use cases

Image source: Ideogram / The Rundown

The Rundown: The Rundown Roundtable is a weekly feature in which we poll members of The Rundown staff about how we use AI in our work and daily lives.

Jennifer, Tech & Robotics Writer: I live in Europe, but I love using American recipes for Christmas cookies and some desserts. So I paste recipes into ChatGPT and ask it to convert all the measurements from cups to grams and ounces to milliliters, etc. I used to do this all manually, which took literally forever.

Shubham, Editor: I skipped the expensive travel agency playbook and planned my Singapore–Malaysia trip for these holidays with ChatGPT instead — with a broad layout at first and then detailed versions day-by-day. It mapped out complete plans, transport routes, ticket logic, food options (preferred Indian), and pacing without pushing generic packages or wasting time on filler attractions.

Jason, Developer: ChatGPT helped me find a perfect Magic: The Gathering card for $50 with historical significance for my brother, who is into that thing. He loved it. I got to pretend like I did a bunch of research when it actually took 15 seconds. Win-win.

AI TRAINING

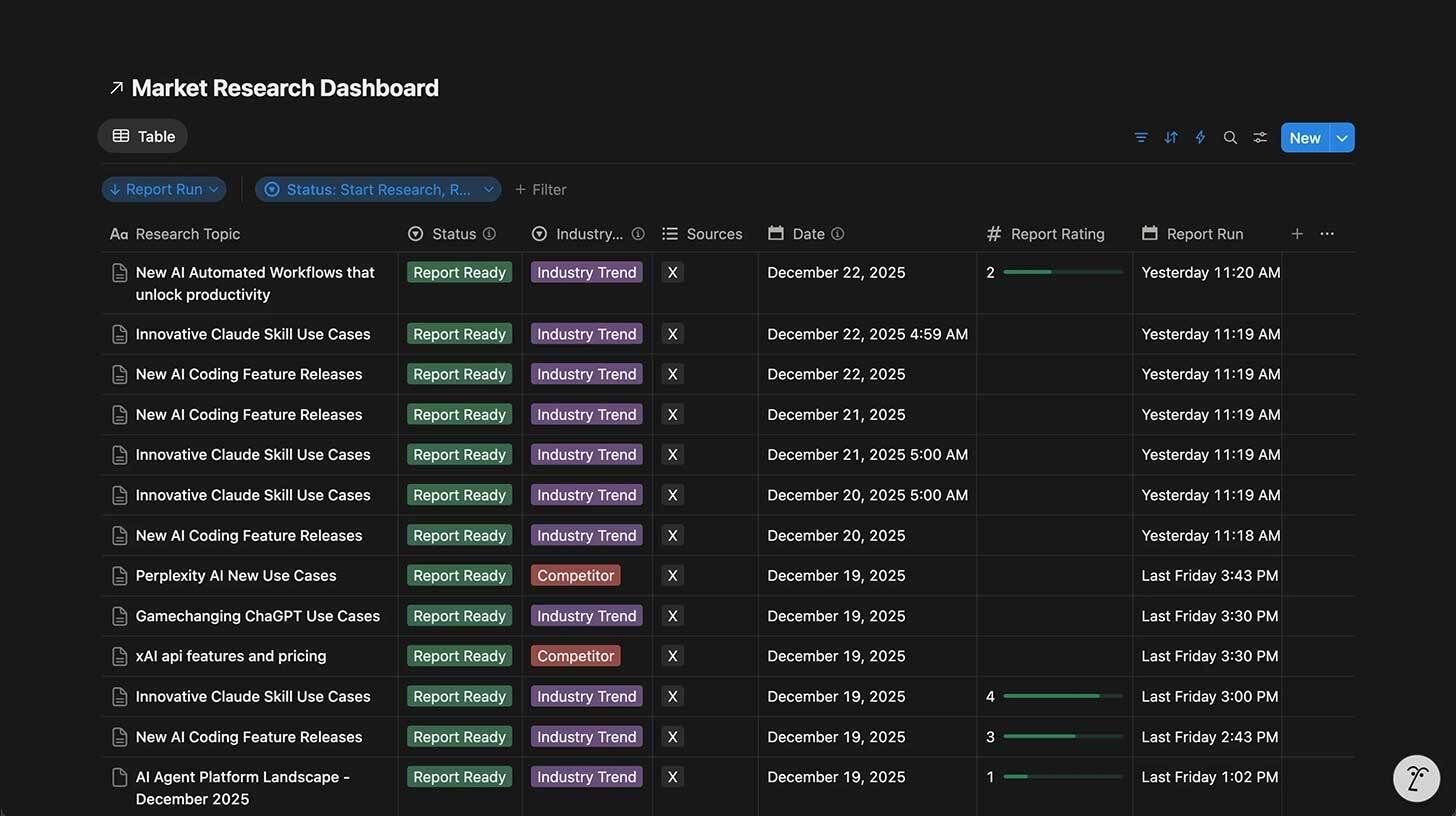

🧪 Perform real-time market research using Grok

The Rundown: In this tutorial, you will learn how to track Twitter trends and news stories from the last 24 hours with xAI's Grok, then automate daily research memos in Notion using Make for any trend or competitor.

Step-by-step:

Copy the Notion Database Template, then go to console.x.ai, create an API key, and fund your xAI account with at least $5

Download the Make blueprint file, log in to Make.com, create a new scenario, click the three dots → Import Blueprint, upload the JSON file, and save

Connect accounts: click the xAI Module 2 and add your API key, then click the Notion Module 3 and authorize access to your page from step 1

Set up the webhook: copy the webhook URL from Make's Webhook Module 1, go to Notion, click the lightning bolt → "Webhook trigger on new," replace the URL, and set it to trigger when Status = "Start Research"

Test by creating a new database row, filling out Research Topic, Date, Industry/Competitor, and Sources, then setting Status to "Start Research"

Pro tip: Set up a trigger that creates a new research memo on the same topic each day. You can send the webhook from this trigger, or manually flip it on to save tokens.
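For readers who want to see roughly what the Make scenario does under the hood, here is a minimal Python sketch of the same flow: check the Status field, turn a database row into a prompt, and send it to xAI. This is not the blueprint's actual logic. The endpoint URL assumes xAI's OpenAI-style chat completions API, the model name is a placeholder to swap for whatever your console lists, and the function names and prompt wording are our own. Live X search options are not shown.

```python
import json
import urllib.request

# Assumption: xAI exposes an OpenAI-style chat completions endpoint at this URL.
XAI_CHAT_URL = "https://api.x.ai/v1/chat/completions"
# Assumption: placeholder model name; use whichever model your xAI console lists.
MODEL = "grok-4"


def should_run(row: dict) -> bool:
    """Mirror the Notion trigger: only rows with Status = "Start Research" run."""
    return row.get("Status") == "Start Research"


def build_payload(row: dict) -> dict:
    """Turn one database row into a chat request body (hypothetical prompt wording)."""
    prompt = (
        f"Write a research memo on '{row['Research Topic']}' "
        f"for {row['Industry/Competitor']}, covering the 24 hours before {row['Date']}. "
        f"Prioritize these sources: {row['Sources']}."
    )
    return {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": "You are a market research analyst."},
            {"role": "user", "content": prompt},
        ],
    }


def send(payload: dict, api_key: str) -> str:
    """POST the payload to xAI and return the memo text. Makes a billable API call."""
    request = urllib.request.Request(
        XAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

To try it, build a row dict with the same field names as the template, confirm `should_run(row)` is true, then pass `build_payload(row)` and your API key to `send`. Keeping the payload builder separate from the network call lets you inspect the prompt before spending any tokens.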

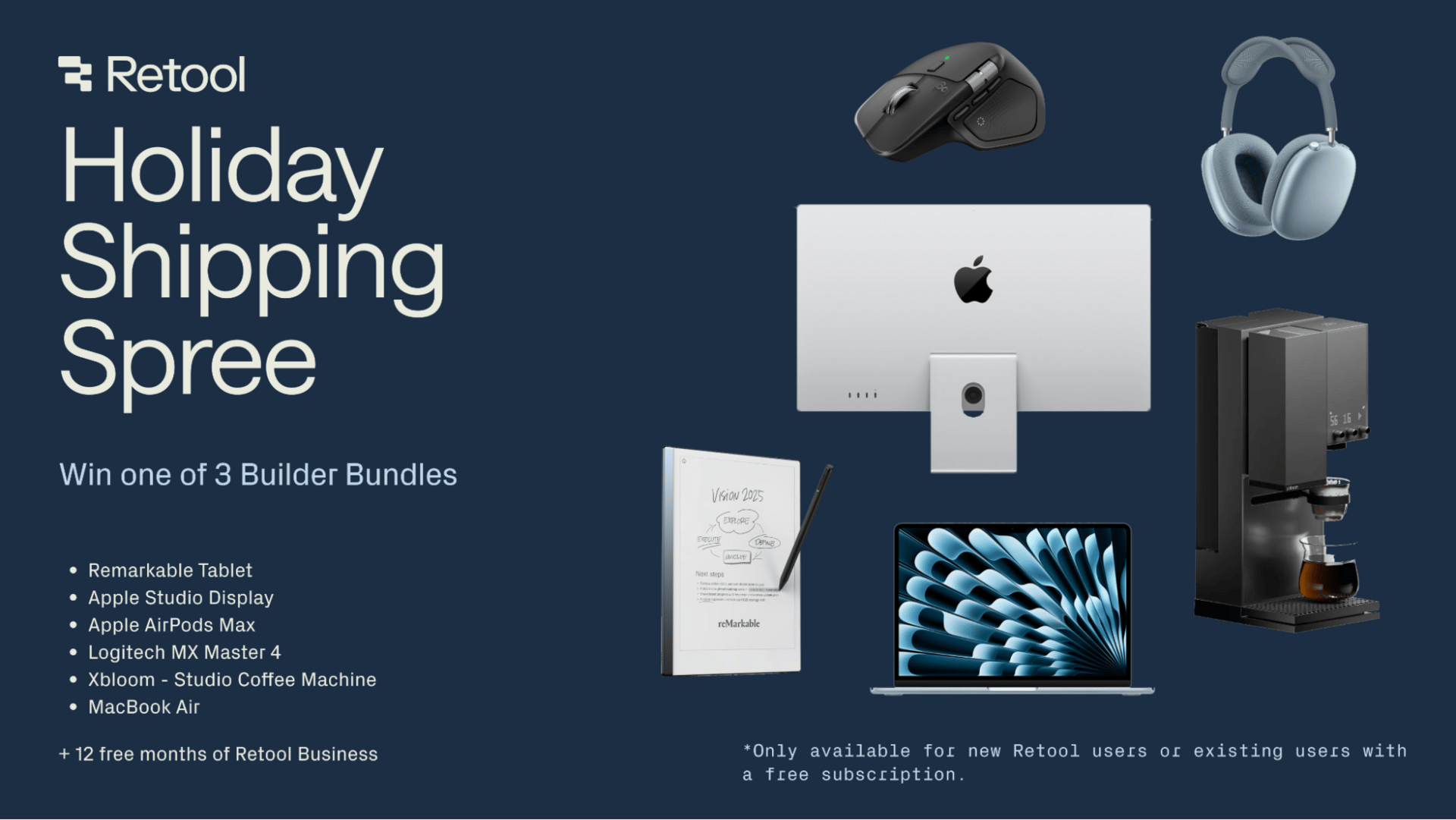

PRESENTED BY RETOOL

🏆 Can AI actually ship production apps?

The Rundown: Retool's Holiday Shipping Spree challenges you to build a real, deployable app using AI-assisted development — and compete for a year of Retool Business plus thousands in builder gear.

Here's how it works:

Get a free month of Retool Business with unlimited AI prompting credits

Build production-ready apps using natural language with AI AppGen

Win a MacBook Air, Apple Studio Display, AirPods Max, and more

Z AI

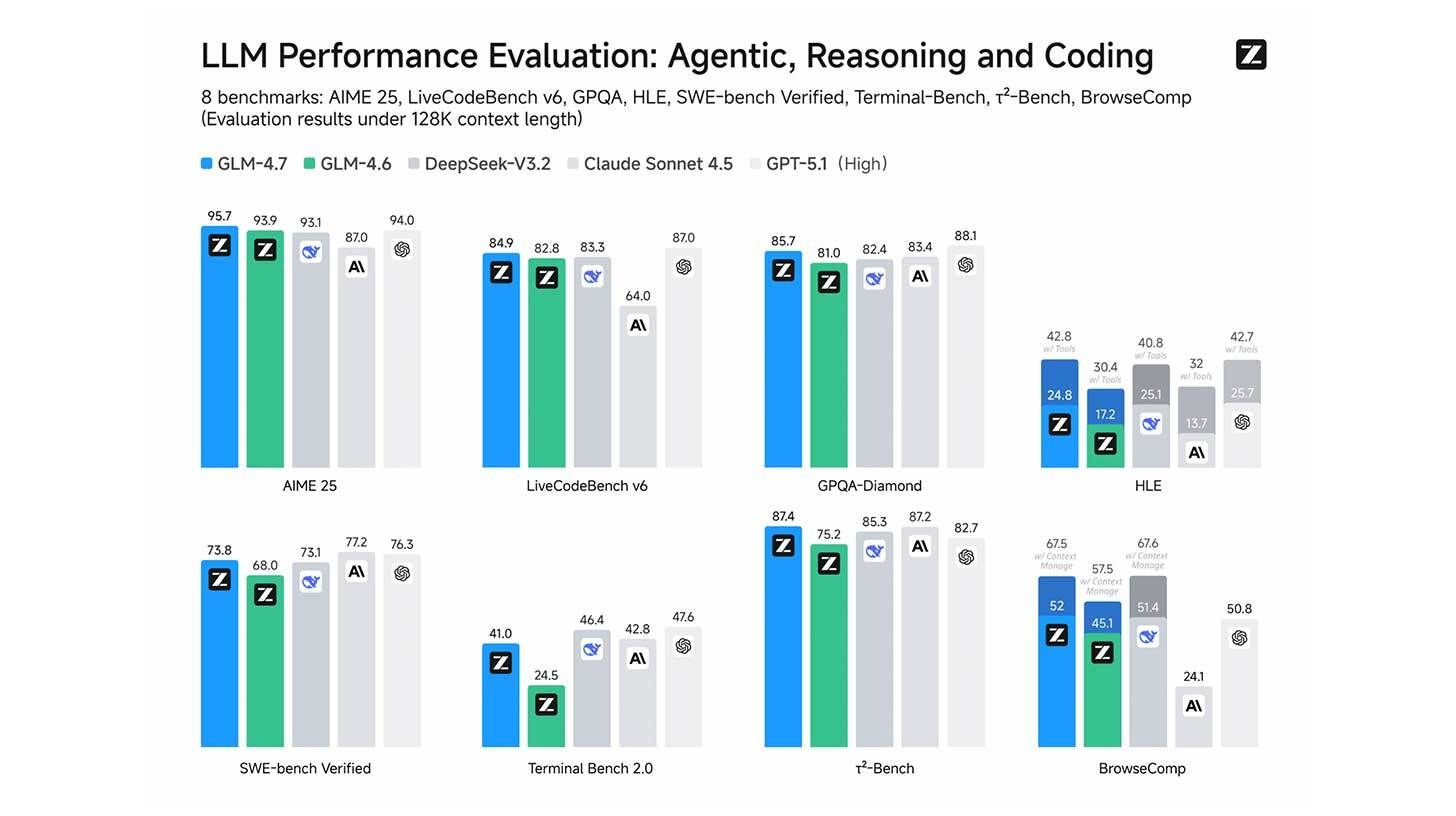

🇨🇳 Z.ai’s GLM-4.7 tops open-source benchmarks

Image source: Z.ai

The Rundown: Chinese AI startup Z.ai just released GLM-4.7, a coding-focused model that tops benchmarks for open-source systems and matches models from top Western rivals — launching just days before its expected Hong Kong listing.

The details:

GLM-4.7 scores 73.8% on SWE-bench, making Z.ai the first Chinese lab to break 70% on the real-world coding benchmark.

GLM-4.7 surpasses open rival DeepSeek-V3.2 across a range of agentic, reasoning, and coding benchmarks, also topping Kimi K2 and Claude Sonnet 4.5.

Z.ai open-sourced the model weights on Hugging Face, with GLM-4.7 also available to use with coding agents like Claude Code.

The Alibaba-backed startup passed Hong Kong IPO hearings last weekend, with a raise of $300M expected next month.

Why it matters: China continues to release highly competitive open-source models at a pace that’s hard to ignore. With those companies also getting an injection of funding and potentially opening access to more advanced AI chips, 2026 may be the year we see a Chinese frontier-level open release catch the top Western leaders.

QUICK HITS

🛠️ Trending AI Tools

🤖 GLM-4.7 - Z.ai's new SOTA open-source model

🚀 MiMo-V2-Flash - Xiaomi's powerful open-weights reasoning model

⚙️ GPT-5.2-Codex - OpenAI’s new top agentic coding model

🔊 SAM Audio - Meta’s model to separate sounds using text prompts

📰 Everything else in AI today

Honeycomb examines how accurate AI Agents really need to be. Read the blog to learn why speed, iteration, and self-correction matter more than perfection.*

AI evaluation firm METR posted a new analysis of Claude Opus 4.5, finding it capable of tasks requiring nearly 5 hours of work — the longest duration of any model to date.

Cursor announced the acquisition of code review platform Graphite, with plans to integrate the tool into its AI-powered code editor.

OpenAI CEO Sam Altman posted a job listing for a ‘Head of Preparedness’ to plan for and secure increasingly advanced models, including “systems that can self-improve”.

Alibaba-backed MiniMax released M2.1, a model with powerful capabilities across a variety of programming languages and for mobile and web app development.

Poetiq published an analysis of its system on the ARC-AGI-2 benchmark running GPT 5.2 X-High, surpassing 70% and scoring the highest of any model by around 15%.

*Sponsored Listing

COMMUNITY

🤝 Community AI workflows

Every newsletter, we showcase how a reader is using AI to work smarter, save time, or make life easier.

Today’s workflow comes from reader Mike in Appleton, WI:

"I am using Windsurf to code an inventory tracking app for a non-profit organization. I am donating my time, and am a back-end developer who only dabbled in front-end development until 2023, when OpenAI released ChatGPT. I can now code professional full-stack apps in days instead of months. This is really a game-changer to all of us devs that only dreamed of creating this stuff without having to spend months and even years getting up to speed."

How do you use AI? Tell us here.

🎓 Highlights: News, Guides & Events

Read our last AI newsletter: AI giants join forces on Genesis Mission

Read our last Tech newsletter: OpenAI eyes $830B mega-valuation

Read our last Robotics newsletter: Top 5 robotics trends this year

Today’s AI tool guide: Perform real-time market research using Grok

Watch our last live workshop: NotebookLM for Work

See you soon,

Rowan, Joey, Zach, Shubham, and Jennifer — the humans behind The Rundown
