Google's AI builds playable worlds in real time

PLUS: OpenAI releases its highly anticipated open-weight models


Good morning, AI enthusiasts. The age of embodied AI is all about training in simulations… but what happens when some scenarios are too hard to build, or even imagine?

Google’s new Genie 3 just cracked that problem with the ability to generate rich, playable environments that evolve in real time as AI agents (or users) explore them, unlocking a whole new frontier of infinite training.

Share your input: We’re committed to continuously improving The Rundown, and we’d love your feedback to help shape its future.

In today’s AI rundown:

Google’s Genie 3 interactive world model

OpenAI finally launches open-weight models

Turn any document into study video presentations

Anthropic releases Claude Opus 4.1

4 new AI tools & 4 job opportunities

LATEST DEVELOPMENTS

GOOGLE DEEPMIND

🌍 Google’s Genie 3 interactive world model

Image source: Google DeepMind

The Rundown: Google DeepMind just announced Genie 3, a new general-purpose world model that can generate interactive environments in real time from a single text prompt, complete with environment and character consistency.

The details:

With Genie 3, users can generate unique 720p environments with real-world physics and explore them in real time, with new frames rendered at 24 fps.

The model’s visual memory extends up to one minute back, letting it generate each new scene consistently with the ones that came before.

To achieve this level of controllability, Google says, Genie computes relevant information from past trajectories multiple times per second.

It also lets users change worlds as they go, either by inserting new characters or objects or by changing the environment dynamics entirely.

Why it matters: Genie 3’s consistent worlds, generated frame by frame in response to user actions, aren’t just a leap for gaming and entertainment. They lay the foundation for scalable training of embodied AI, where machines can tackle “what if” scenarios, like a path suddenly vanishing, by adapting in real time, just as humans do.

TOGETHER WITH SŌKOSUMI

🤖 The first open marketplace for AI agents

The Rundown: Sōkosumi is the first open marketplace for autonomous AI agents, letting you hire specialized AI co-workers for content, research, design, and data tasks with just a single click. Built for professionals and enterprise teams who need results — not subscriptions.

The platform offers:

MCP-ready, multi-model agents (ChatGPT, DeepSeek, Mistral)

Enterprise-grade security with GDPR compliance and SSO integration

Pay-per-task pricing — no monthly subscriptions or hidden fees

Build your AI agent team today — redeem $100 in free credits with code RUN100.

OPENAI

🧠 OpenAI finally launches open-weight models

Image source: OpenAI

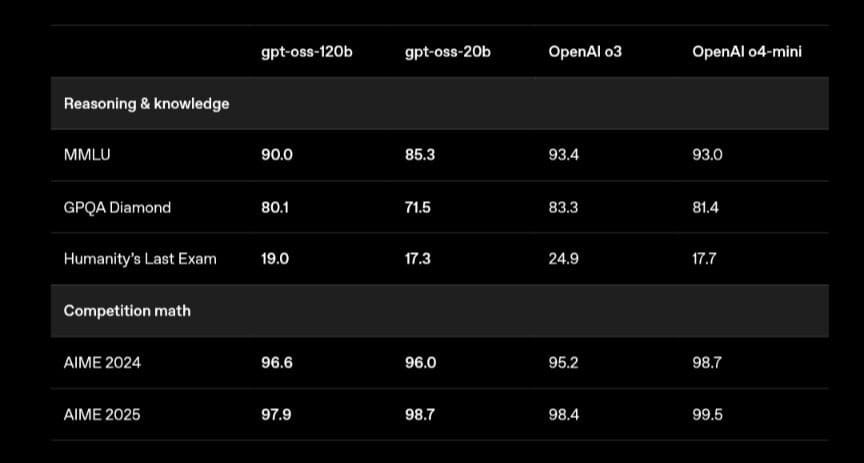

The Rundown: OpenAI unveiled gpt-oss-120b and gpt-oss-20b, its long-awaited open-weight reasoning LLMs that match or exceed o4-mini and o3-mini, respectively, on core benchmarks and are available for local deployment under an Apache 2.0 license.

The details:

The gpt-oss family, OpenAI’s first open-weight LLMs since GPT-2 in 2019, quickly became the #1 trending model among the 2M+ hosted on Hugging Face.

The 120B variant performs on par with o4-mini on core benchmarks and exceeds it in certain domains, while running on a single 80GB GPU.

Meanwhile, the smaller 20B version is competitive with o3-mini and is small enough to run locally on laptops with 16GB of memory.

Both models feature adjustable reasoning effort (low, medium, high) and support agentic workflows, including function calling, web search, and Python code execution.

Why it matters: After keeping its best models locked for years, OpenAI is finally living up to its name, giving developers access to near-frontier reasoning models they can run and modify in their own environments. It’s a major boost for the open-source ecosystem, which has been rapidly closing the gap with closed models.

From the community: We’re hosting a workshop this Friday on the key benefits of local models and how you can run gpt-oss models on your computer.

AI TRAINING

🎥 Turn any document into study video presentations

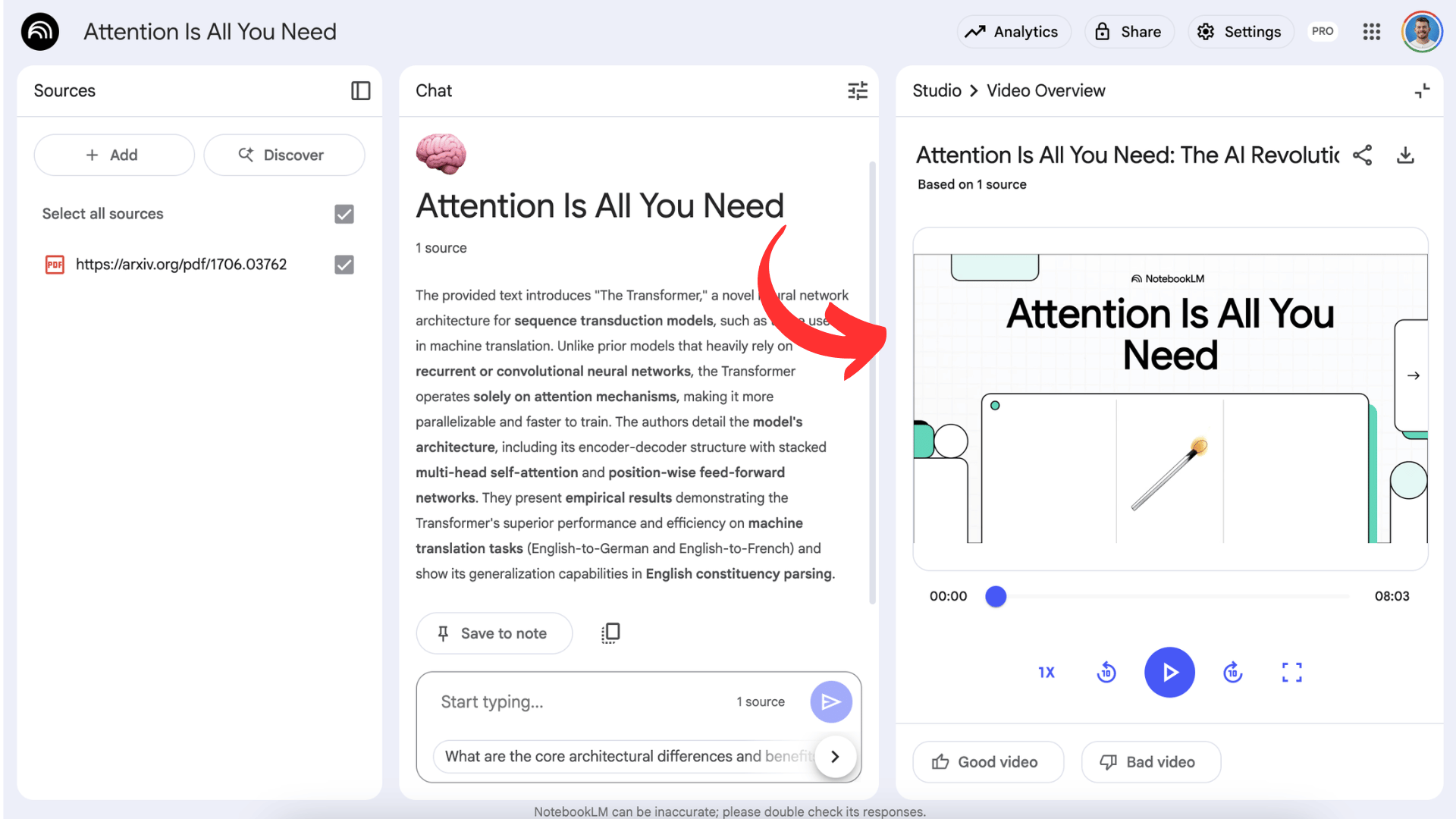

The Rundown: In this tutorial, you’ll learn how to use NotebookLM’s new ‘Video Overview’ feature to turn documents into AI-narrated video presentations with slides — pulling images, diagrams, quotes, and numbers directly from the content.

Step-by-step:

Visit NotebookLM, create a new notebook, and upload your documents

In the Studio panel on the right, click “Video Overview”

Optional: Click the three-dot menu to customize your focus topics, target audience, or learning goals

Review your generated video, then click “Download” to save it as an MP4

Pro tip: Create multiple Video Overviews in one notebook to make versions for different audiences or to focus on different chapters of your content.

PRESENTED BY LOVART

🎨 Your next creative partner might not be human

The Rundown: Lovart has officially exited beta with its AI design platform built for visual collaboration. It features a creative reasoning agent that thinks with you, sources references, and builds brand systems in minutes. Designers say it feels more like working with a teammate than using a tool.

With Lovart, you can:

Use the new ChatCanvas feature to visually collaborate with your agent, iterate, and refine in real time through natural language.

Turn simple prompts into brand visuals, social content, videos, and even 3D models

Rely on a specialized agent to maintain style consistency across materials

Work faster with memory that learns how you design and adapts to your habits

Transform your creative process with the world's first design agent today.

ANTHROPIC

🤖 Anthropic releases Claude Opus 4.1

Image source: Anthropic

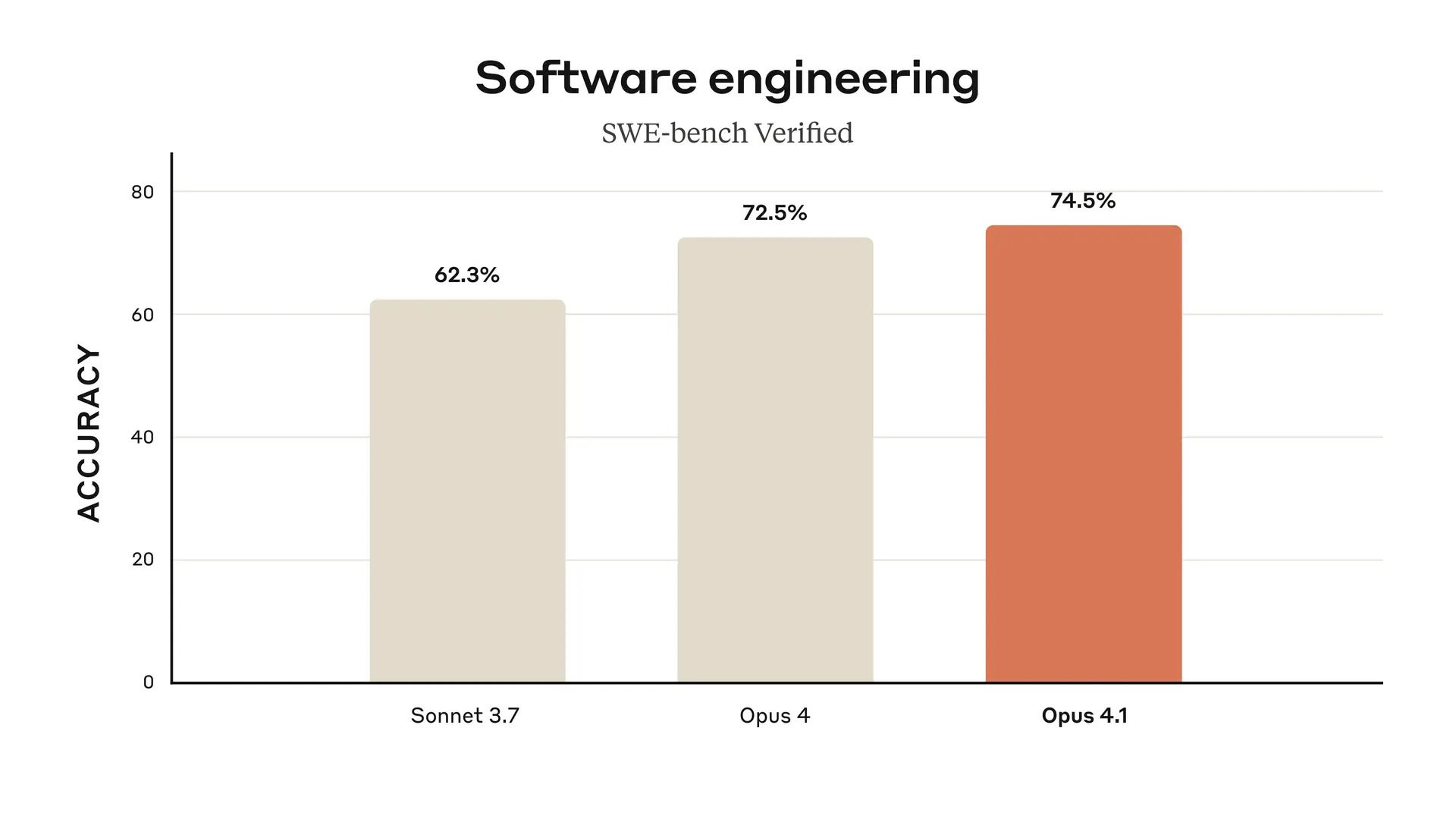

The Rundown: Anthropic released Claude Opus 4.1, an incremental upgrade to Opus 4 that improves performance on real-world coding, in-depth research, and data analysis tasks, particularly those requiring attention to detail and agentic actions.

The details:

Opus 4.1 brings a notable coding upgrade over its predecessor, boosting performance on SWE-bench Verified from 72.5% to 74.5%.

Improvements are also seen across math, agentic terminal coding (TerminalBench), GPQA reasoning, and visual reasoning (MMMU) benchmarks.

Customers cited real-world gains with the model, saying it excels at tasks like multi-file code refactoring and pinpointing precise corrections in large codebases.

The upgrade is available to paid users and businesses, and Anthropic said it plans to release “substantially larger improvements” to its models in the coming weeks.

Why it matters: With Opus 4.1, Anthropic is adding more momentum to what’s shaping up to be an exciting week for AI enthusiasts. The upgrades are a welcome gift, but with OpenAI’s GPT-5 potentially dropping any day, all eyes will be on how Anthropic’s models hold their ground, especially in coding, where the company has stood out.

QUICK HITS

🛠️ Trending AI Tools

🔄 Depot’s Claude Code Sessions - Persistent AI coding sessions that sync across teams & environments for seamless collaboration*

⚙️ Kaggle Game Arena - Benchmark to test LLMs on evolving strategic games

📽️ ChatGPT - OpenAI’s AI assistant, now with tools to detect mental distress

📝 Gemini Storybooks - Google’s AI now creates narrated storybooks

*Sponsored listing

💼 AI Job Opportunities

⚙️ The Rundown - Growth and Content Strategist

📢 Groq - Product Marketing Manager, Sales Enablement

🛠️ Figure AI - Prototype Development Technician

🎭 Meta - Creative Director

📰 Everything else in AI today

ElevenLabs introduced Eleven Music, its multilingual music generation model with control over genre, style, and structure, and the option to edit both sounds and lyrics.

Google added a new Storybook feature to the Gemini app, allowing users to generate personalized storybooks about anything with read-aloud narration for free.

Perplexity acquired Invisible, a company developing a multi-agent orchestration platform, to scale its Comet browser for consumer and enterprise users.

Elon Musk said Grok Imagine, xAI’s image and video generator, is seeing massive interest, with 20 million images generated yesterday alone.

Alibaba released its Flash series of Qwen3-Coder and Qwen3-2507 models via API, with context windows of up to 1M tokens and low pricing.

Shopify added new agent-focused features, including a checkout kit to embed commerce widgets into agents, low-latency global product search, and a universal cart.

COMMUNITY

🎥 Join our next live workshop

Join our next workshop this Friday, August 8th, at 4 PM ET with Dr. Alvaro Cintas, The Rundown’s AI professor. By the end of the workshop, you’ll know how to run your own fully private, open-weight model locally on your computer.

RSVP here. Not a member? Join The Rundown University on a 14-day free trial.

See you soon,

Rowan, Joey, Zach, Shubham, and Jennifer—the humans behind The Rundown

Stay Ahead on AI.

Join 2,000,000+ readers getting bite-size AI news updates straight to their inbox every morning with The Rundown AI newsletter. It's 100% free.